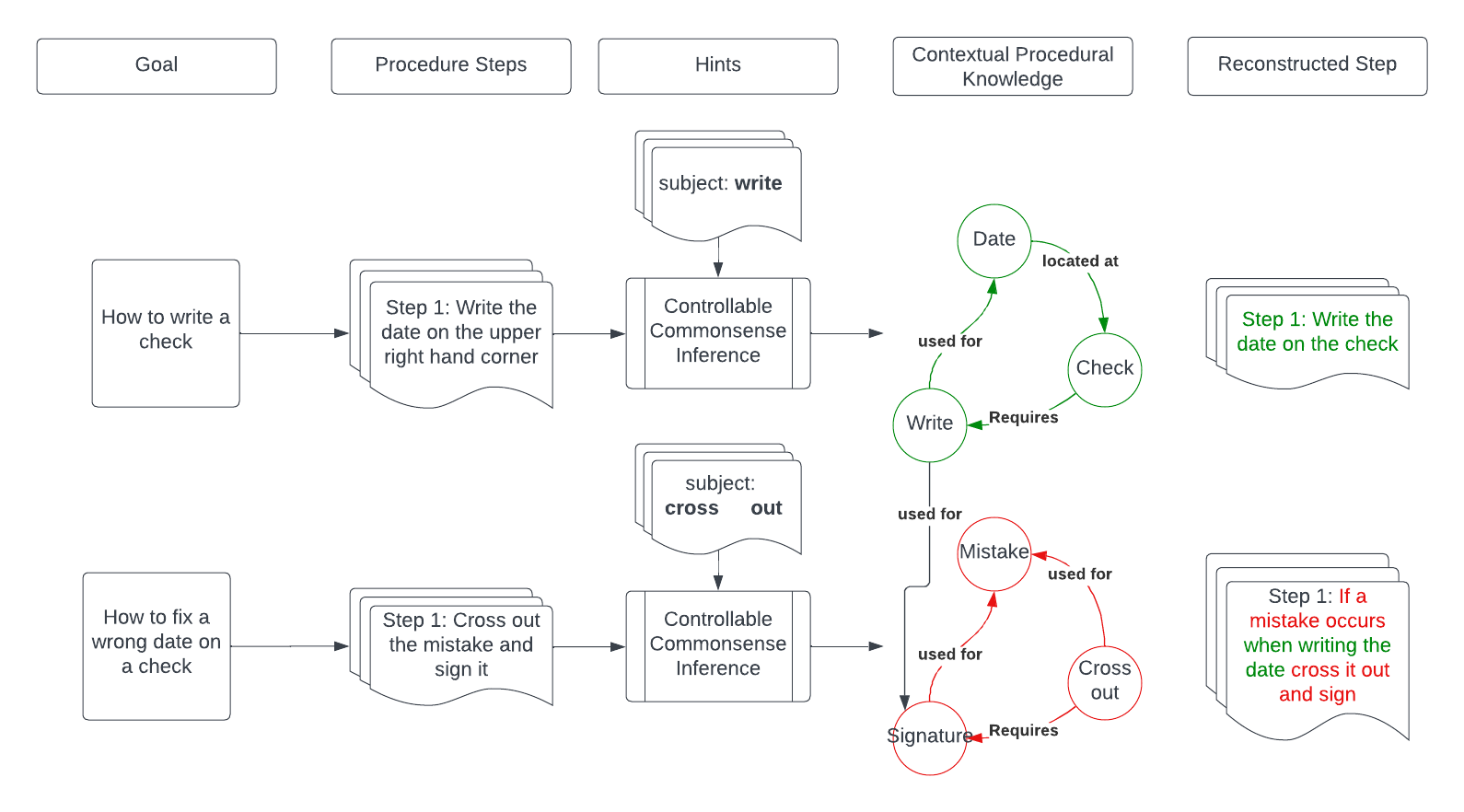

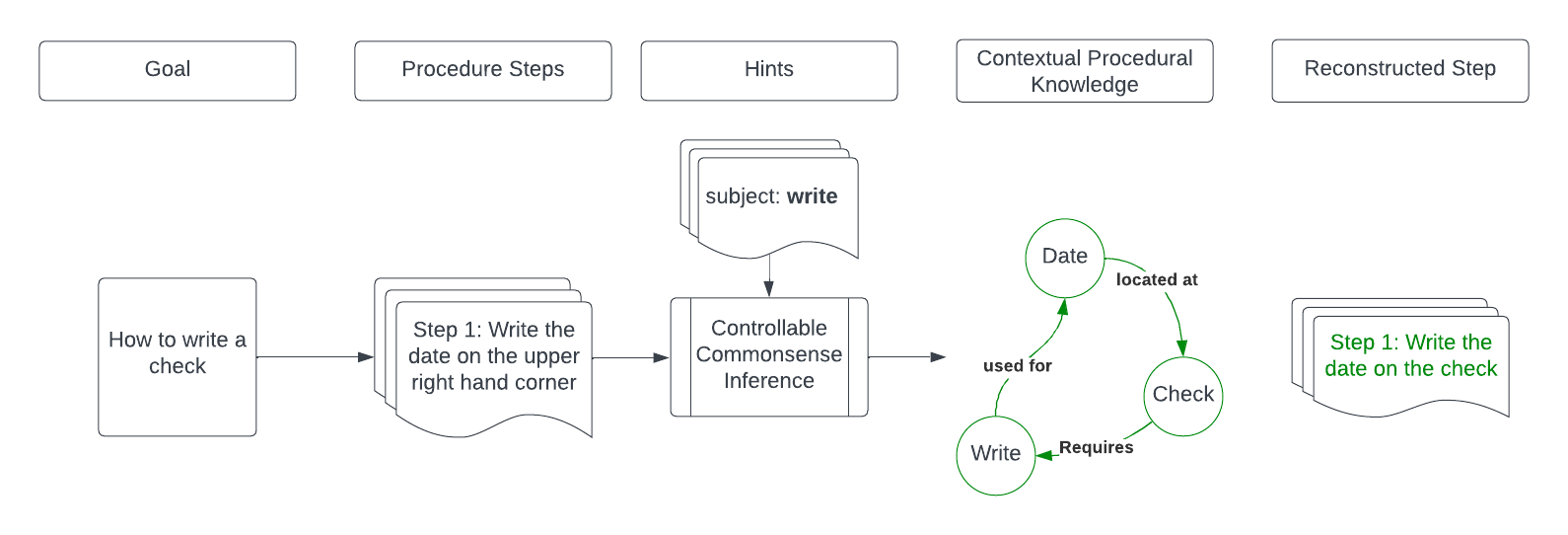

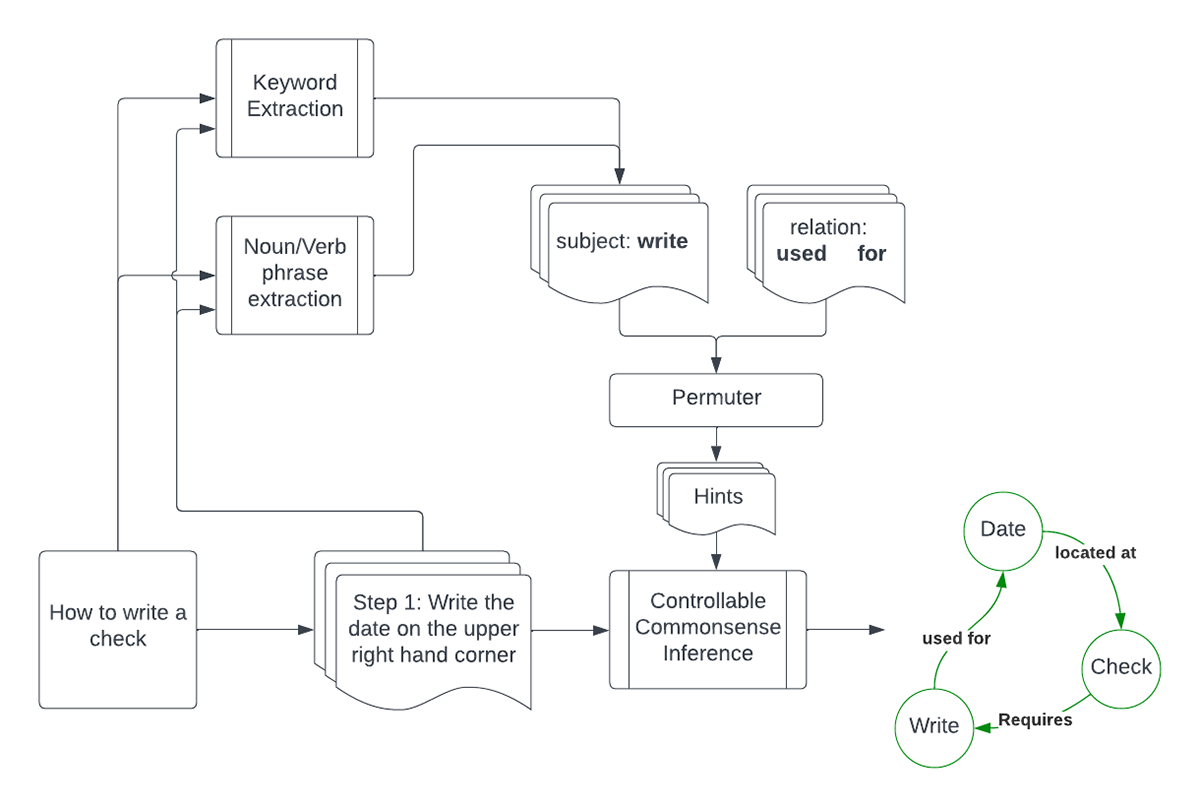

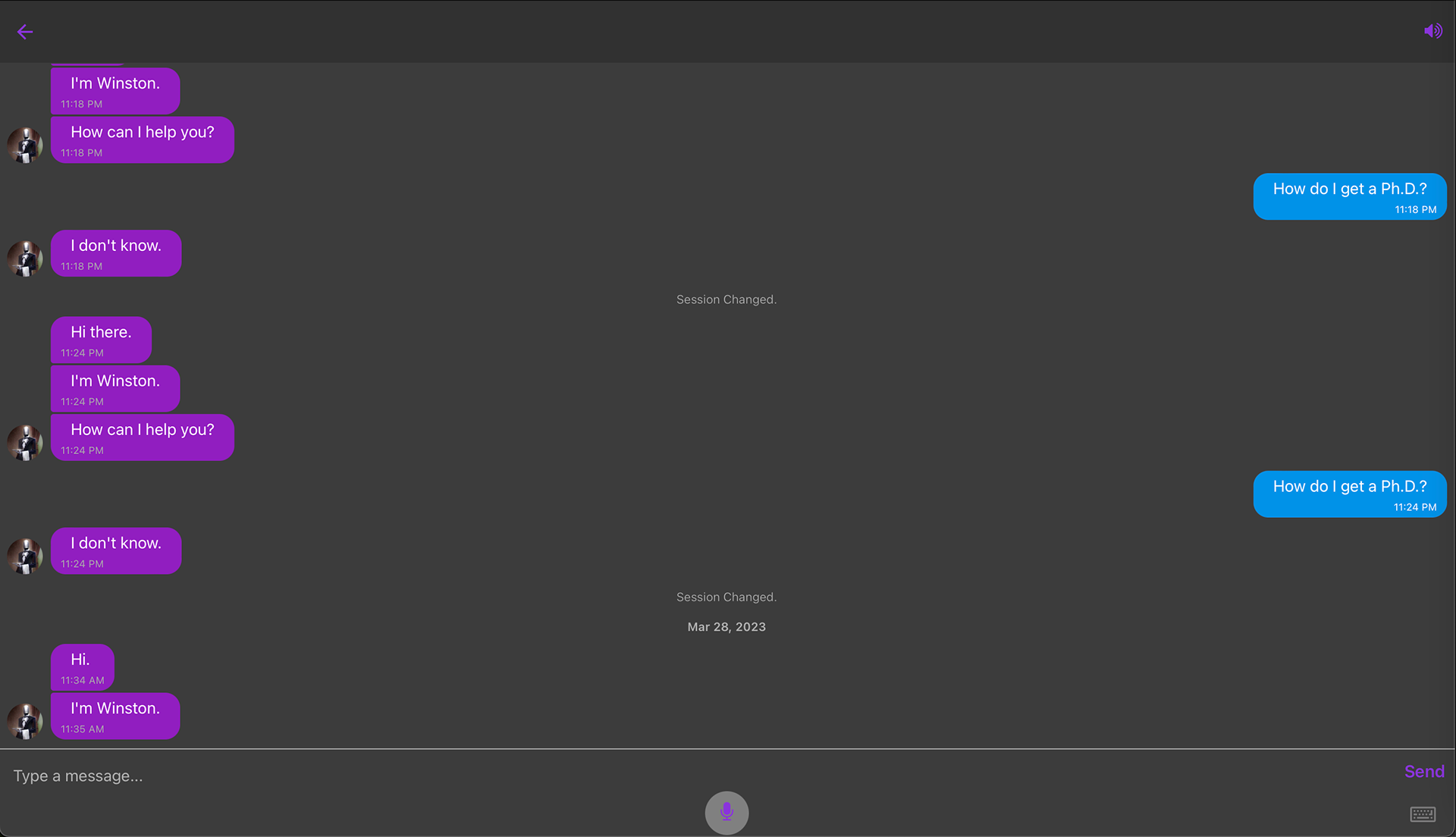

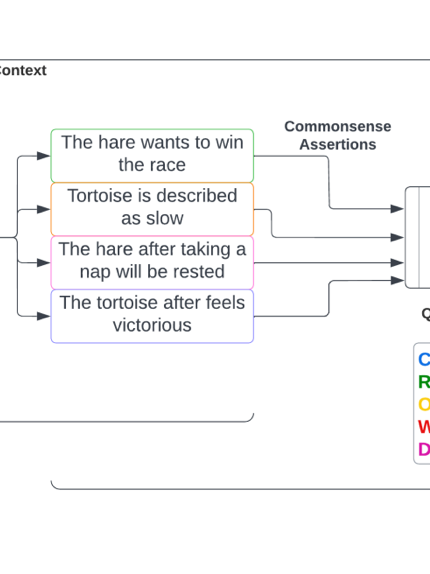

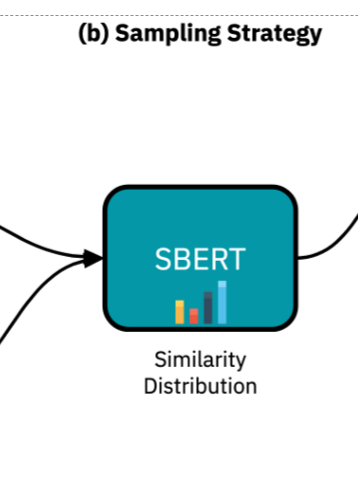

The idea came from the observation that we have self-driving cars and rockets that land by themselves, yet we don't have a good way to answer how-to questions. I developed a proof-of-concept system that could (without what are now very large language models!) take a relevant website, extract a contextual knowledge graph from it, and use a subset of that graph to generate the steps of a procedure. These steps were then handed off to a system that executed a conversational pattern (namely the Extended Telling from Dr. Robert Moore) to relay them to a user. Below is an example.

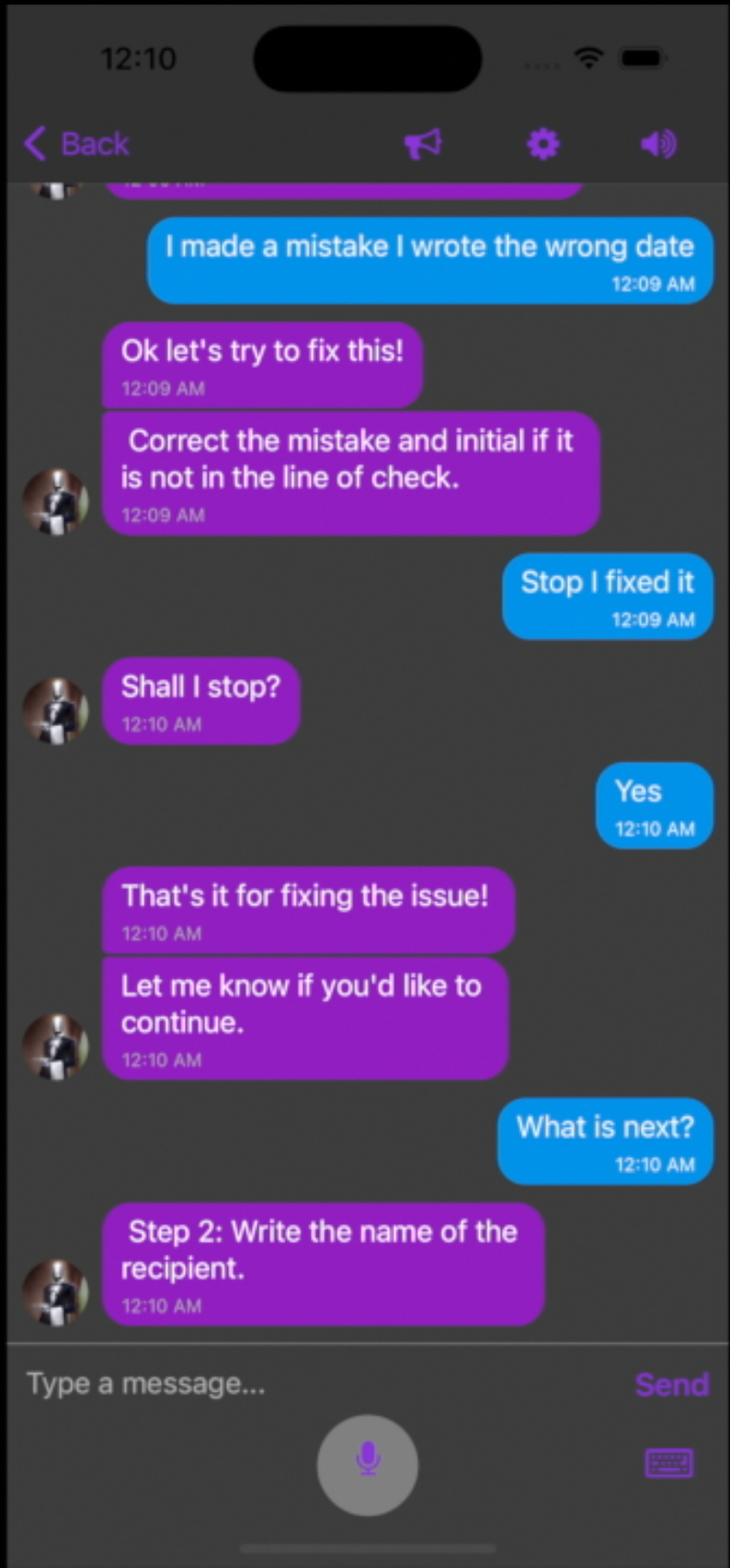

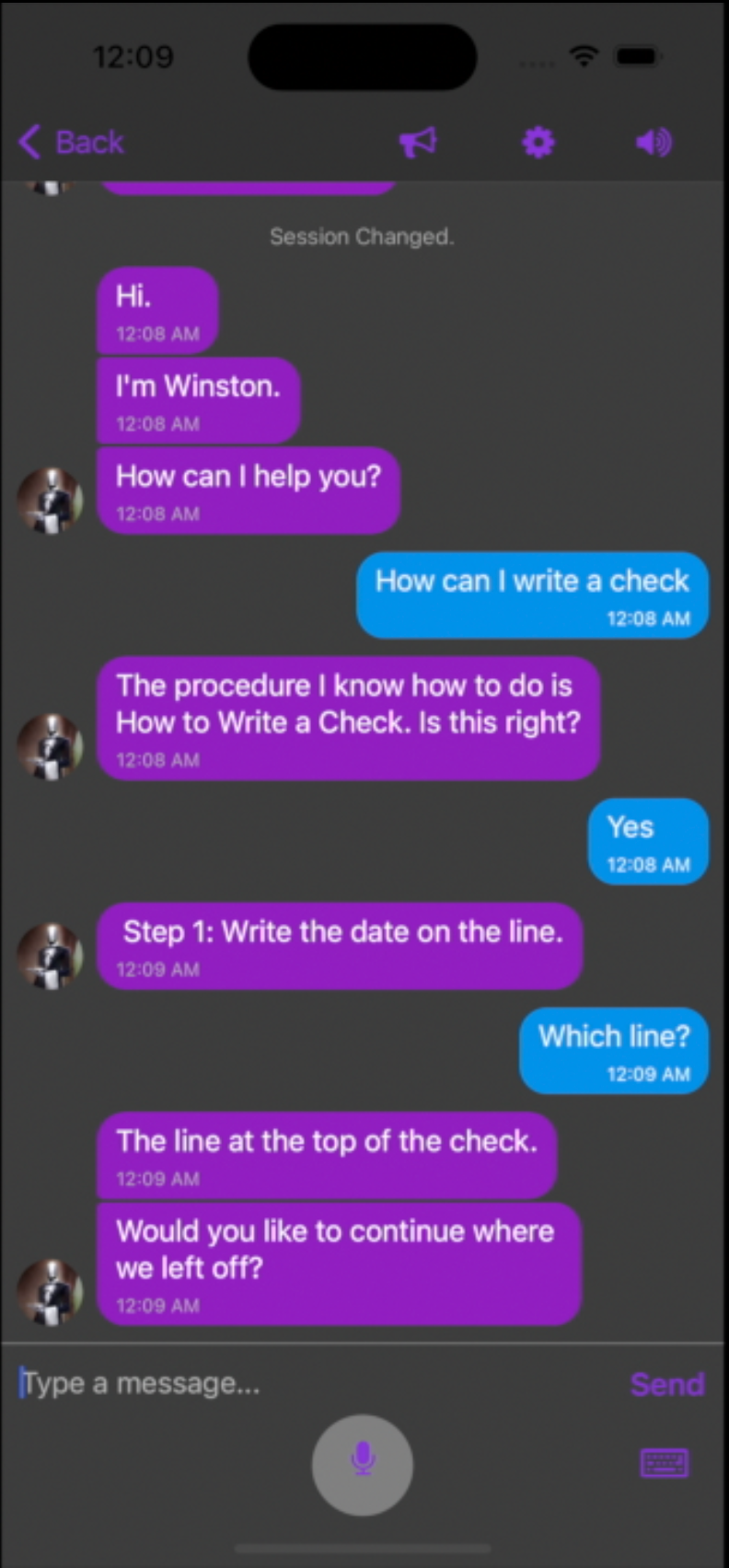

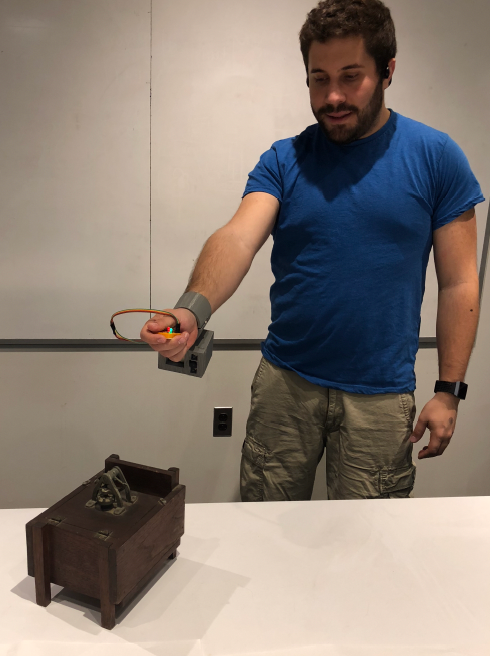
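To make the graph-to-steps idea concrete, here is a minimal sketch in Python. It is not the thesis implementation: the triples, the relation names (`precedes`, `requires`, `detail`), and the functions `procedure_steps` and `elaborate` are all hypothetical, chosen only for illustration. It assumes the extracted graph can be reduced to (subject, relation, object) triples, and it orders the steps with a topological sort over the `precedes` edges. The conversational hand-off is not modeled here.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical toy graph as (subject, relation, object) triples.
# The real system extracted its graph from a website; this is hand-written.
TRIPLES = [
    ("boil water", "precedes", "add pasta"),
    ("add pasta", "precedes", "drain pasta"),
    ("boil water", "requires", "pot"),
    ("add pasta", "detail", "stir occasionally so it does not stick"),
]


def procedure_steps(triples):
    """Order the steps using only the 'precedes' subset of the graph."""
    sorter = TopologicalSorter()
    for subj, rel, obj in triples:
        if rel == "precedes":
            # obj can only happen after subj, so subj is its predecessor.
            sorter.add(obj, subj)
    return list(sorter.static_order())


def elaborate(triples, step):
    """Look up extra information attached to a single step."""
    return [obj for subj, rel, obj in triples if subj == step and rel == "detail"]


if __name__ == "__main__":
    for number, step in enumerate(procedure_steps(TRIPLES), start=1):
        print(f"{number}. {step}")
        for note in elaborate(TRIPLES, step):
            print(f"   (more detail: {note})")
```

Keeping ordering and detail as separate relations means the step list stays short, while extra information remains available in the same graph if a step needs explaining.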

The cool thing about this system is that, because it was built on a knowledge graph, it could supply more information if there was an issue in the procedure. Here is the full thesis document. Below are some images of the UI that was developed for this.