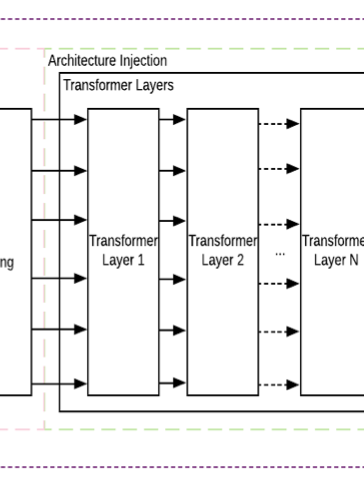

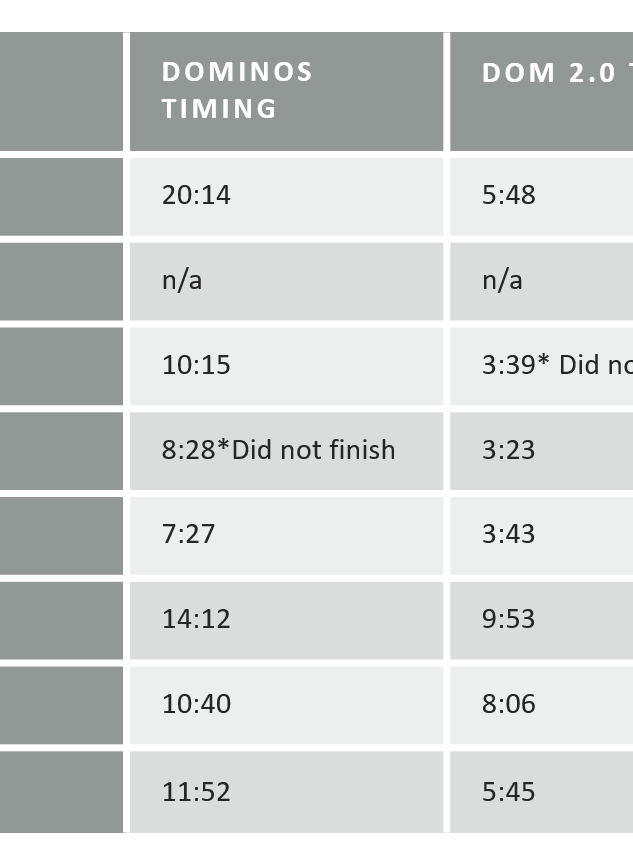

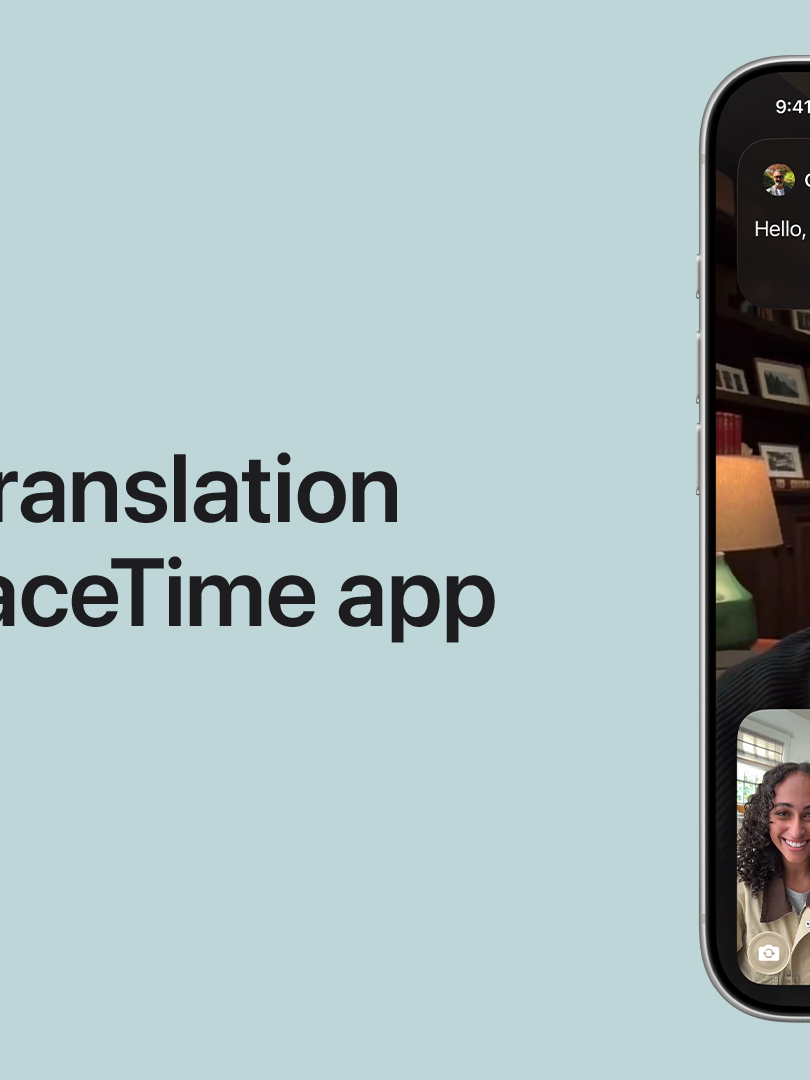

While at Apple, I have worked on both technical and design explorations centered on conversational use of LLMs, LLMs for Accessibility, LLMs for iOS devices, tooling for interactive LLM experiences, LLMs for Health, and LLMs for UIs, among others. Along the way I have developed tools and prototypes for iOS, macOS, watchOS, and visionOS, and have worked on tooling for experiences on Linux. We validated some of these prototypes through internal user testing, and many were developed through user-centric design, involving the users of the interactions early on to shape and refine them. Some of my work has helped influence production systems (see Translate for Watch and FaceTime), and I am helping bring other projects to production for future releases. Typically I work with designers to craft experiences and bring them to life. When needed, I also provide the technical implementation for these experiences and guide engineering work on features and interactions.

Some technologies I have used: llama.cpp, Core ML, Python, Flask, Xcode, Claude Code, Hugging Face Transformers, and Node.js.